Using a robot to help family members look after seniors living alone

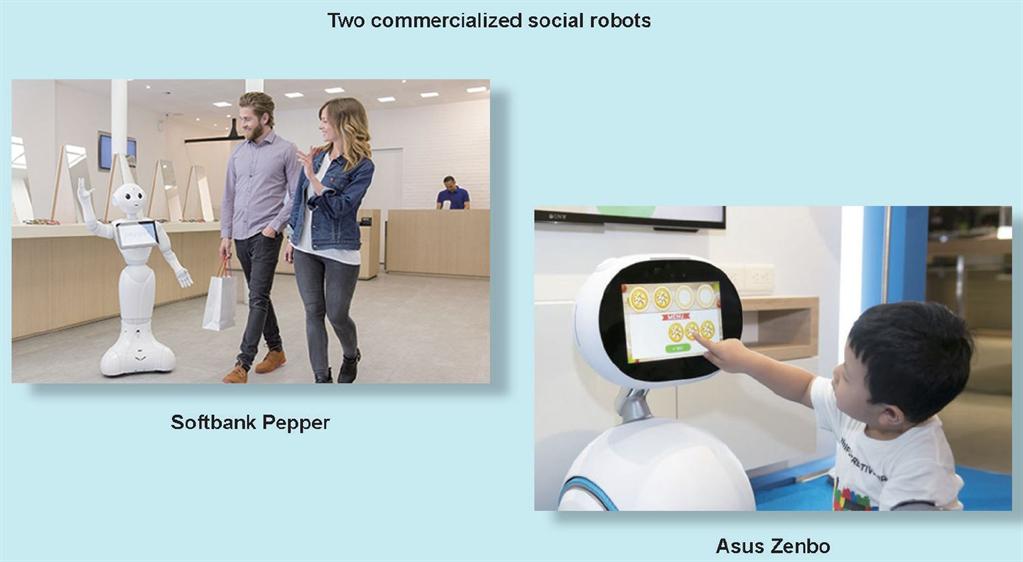

As a large portion of Taiwan’s population grows older, Dr. Yang is investigating whether video summarization techniques running on a social robot can help family members look after seniors living alone. The setting is a home where an elderly person lives alone and whose family members are willing to provide care but are too busy to help every day. A household robot would help if it could follow the senior, keep its attention on the senior, notify family members in an emergency, and create a brief video summary of the senior’s daily activities. In practice, no such capability is currently available. Figure 1 shows two commercial social robots, Softbank Pepper and Asus Zenbo. Although both robots are easy to purchase, hardware and software limitations prevent them from performing high-level intelligent tasks. On the hardware side, because of limited battery capacity and cost considerations, both robots are equipped with low-cost ultra-low-voltage CPUs (central processing units), which lack the computing power needed for complex calculations on large volumes of data, such as those gathered for video analysis. On the software side, although action recognition and video summarization have improved rapidly, most state-of-the-art algorithms are based on CNNs (convolutional neural networks) and require powerful processors, particularly GPUs (graphics processing units), to speed up computation. Neither Pepper nor Zenbo has a GPU.

Proposed method

To overcome the limited computational resources, Dr. Yang uses a standalone machine equipped with a high-performance CPU and GPU as a server that extends the computing power of a social robot, as shown in Figure 2. With these resources available, Dr. Yang aims to develop three related algorithms that work toward a common goal. As illustrated in Figure 3, the foundation of the approach is an algorithm that enables the robot to detect and track a human so that it can follow and watch an elderly person. Provided this part is sufficiently robust, the robot will produce videos that consistently show a human at the center of each frame. Dr. Yang then plans to apply existing action recognition and video summarization algorithms to these videos to generate action labels and summarized videos.

Developments

Dr. Yang’s current progress includes the development of programs for detection and tracking, a few sanity tests for action recognition, and a literature survey of video summarization. For detection and tracking, Dr. Yang developed an Android app that runs on a Zenbo robot, since Zenbo’s operating system is a customized version of Android 6.0. The app turns on Zenbo’s camera, gathers the captured video frames, converts their color space, and compresses them into the JPEG (Joint Photographic Experts Group) format before sending them to the server, which reduces data size and transmission lag. On the server side, Dr. Yang developed a Linux program with an HTTP (Hypertext Transfer Protocol) service component to communicate with the robot. When it receives a request, the program decompresses the image and calls OpenPose, a human pose recognition library (Cao, Simon, Wei, & Sheikh, 2017), to detect key points of the human body, as shown in Figure 4. In the early stages of his research, Dr. Yang tried two other detection algorithms: a pedestrian detection algorithm based on histograms of oriented gradients (Dalal & Triggs, 2005) and YOLO, a CNN-based real-time generic object detection algorithm (Redmon, Divvala, Girshick, & Farhadi, 2016). He found that representing a human body as a bounding box, as both algorithms do, is insufficient, because the locations of individual body parts must be known in order to drive the robot’s movement and control the camera orientation.
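The server's role in this exchange can be illustrated with a minimal Python sketch that uses only the standard library. It is not Dr. Yang's program. The function names, the use of a POST request, the JSON response layout, and the port number are all assumptions made for illustration, and `detect_keypoints` is a stub standing in for the step where a real server would decode the JPEG and call OpenPose.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

JPEG_MAGIC = b"\xff\xd8"  # every JPEG stream begins with this start-of-image marker


def detect_keypoints(jpeg_bytes):
    """Hypothetical stub: a real server would decode the frame and run a pose detector."""
    return {"nose": [320, 180], "neck": [320, 260]}


def build_response(jpeg_bytes, detector=detect_keypoints):
    """Turn one uploaded frame into an (HTTP status, JSON body) pair."""
    if not jpeg_bytes.startswith(JPEG_MAGIC):
        return 400, json.dumps({"error": "body is not a JPEG image"})
    return 200, json.dumps({"keypoints": detector(jpeg_bytes)})


class FrameHandler(BaseHTTPRequestHandler):
    """Accepts one compressed frame per POST request and replies with key points."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, body = build_response(self.rfile.read(length))
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8000):
    """Run the frame service until interrupted (the port is an arbitrary choice)."""
    HTTPServer(("0.0.0.0", port), FrameHandler).serve_forever()
```

Calling `serve()` starts the service. Keeping `build_response` separate from the handler lets the frame-to-JSON step be exercised without opening a network socket.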

Once it receives an HTTP response from the server containing a group of key point coordinates, the Zenbo-side app computes a set of parameters (a new neck yaw angle, a new body rotation angle, and a new body location) and calls the robot’s API (application programming interface) to apply them so that the robot tracks the user, that is, keeps the human in the center of subsequent video frames. The challenge lies in determining how to generate adequate new parameters from the current parameters plus an image. Dr. Yang has therefore simplified the problem by reducing each image to the key points of the human body and applying a rule-based approach to compute the new parameters. If the user is far enough from the robot that most of the key points are visible, the algorithm keeps the upper body in the center of the frame. If the user is fairly close to the robot, the algorithm tracks the user’s face, that is, keeps the nose in the center of the frame. Otherwise, if the user is too close for any facial key points to be visible, the algorithm increases the robot’s neck yaw angle until it finds a face. The approach is easy to implement but difficult to tune because all of its parameters are set by hand rather than learned. It would be interesting to apply regression or other machine learning methods to generate adequate new parameters in future studies.
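The three rules can be sketched in Python as follows. This is an illustration under stated assumptions rather than the app's actual logic: the part names, the total of 18 key points, and the 0.8 threshold used to interpret "most of the key points are visible" are all hypothetical, and the conversion from a pixel offset to the robot's neck and body parameters is left out.

```python
FACE_PARTS = ("nose", "left_eye", "right_eye")                    # assumed part names
UPPER_BODY_PARTS = ("neck", "left_shoulder", "right_shoulder")   # assumed part names
TOTAL_PARTS = 18          # assumed size of the detector's key point set
FAR_VISIBLE_RATIO = 0.8   # assumed threshold for "most key points visible"


def mean_point(points):
    """Average a non-empty list of (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def choose_target(keypoints, frame_size):
    """Pick the tracking rule for one frame.

    keypoints maps a part name to (x, y) and holds detected parts only.
    Returns (mode, offset), where offset is the target's pixel displacement
    from the frame center, or None when the robot should search for a face.
    """
    cx, cy = frame_size[0] / 2, frame_size[1] / 2
    upper = [keypoints[n] for n in UPPER_BODY_PARTS if n in keypoints]
    face = [keypoints[n] for n in FACE_PARTS if n in keypoints]

    # Rule 1: user is far away, so most key points are visible.
    if upper and len(keypoints) >= FAR_VISIBLE_RATIO * TOTAL_PARTS:
        tx, ty = mean_point(upper)
        return "upper_body", (tx - cx, ty - cy)

    # Rule 2: user is fairly close, so follow the face (the nose if detected).
    if face:
        tx, ty = keypoints.get("nose", mean_point(face))
        return "face", (tx - cx, ty - cy)

    # Rule 3: no facial key point is visible, so the neck should keep moving.
    return "search_face", None
```

For a 640 by 480 frame, `choose_target({"nose": (400, 240)}, (640, 480))` selects the face rule with an offset of 80 pixels to the right of center. The hand-set constants at the top are exactly the kind of parameter that makes a rule-based approach hard to tune.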

For action recognition, Dr. Yang found the Charades dataset (Sigurdsson et al., 2016) highly useful because all of its videos are recorded in homes. The dataset is publicly available, highly diverse, and richly annotated with a long list of action labels. Dr. Yang applied an action recognition algorithm (Simonyan & Zisserman, 2014) trained on the Charades dataset to videos captured by a Zenbo robot and found that the performance did not drop. It is therefore feasible to exploit the existing dataset by transferring domain knowledge. However, Dr. Yang also identified two differences between the assumptions behind the Charades dataset and his research setting: the video clips in Charades are short and show the full human body because they are recorded by people, whereas the clips in Dr. Yang’s setting are long and may contain no person at all because they are recorded by a robot. Dr. Yang found that existing action recognition algorithms still generate action labels even when no person is in the frame, because those algorithms learn features not only from the person but also from the surrounding objects. It would be interesting to address this problem in future studies by integrating human and object information.

Summary

This research is ongoing, and its motivation, approach, and current progress are reported here. Dr. Yang aims to add features to social robots that help family members look after aged parents living alone, but social robots may not have sufficient computational resources. Dr. Yang has therefore set up a powerful server that processes the transmitted image data with a state-of-the-art pose detection algorithm, and the resulting human body landmarks are used to control the robot’s movement and camera orientation for human tracking. Dr. Yang plans to integrate action recognition and video summarization algorithms to detect hazards to the safety of elderly persons and to create video summaries for the convenience of their remote family members.

Figure 1. With maturing technologies and decreasing costs, intelligent robots will gradually appear around us to provide services we need. Left: A Softbank Pepper robot says hello to customers in a lobby. Right: An Asus Zenbo robot hosts an educational game on its touch screen for a young child.

Figure 2. We extend the limited computational resources available on a robot by adding a powerful computer equipped with a high-performance CPU and GPU and large storage and memory capacities. Data are transmitted between the robot and the computer over a wireless connection so that the robot remains free to move.

Figure 3. Since the camera used in this research is installed on a social robot and the user is mobile, a robust detection and tracking method is needed to keep the user in the center of captured frames for further analysis. Then, an action recognition algorithm is applied to find important human actions in the captured video. Finally, a video summarization algorithm removes uninteresting sections from long videos and creates a short, information-rich video summary for a better viewing experience. The study aims to develop algorithms that complete the three steps automatically to reduce human effort.

Figure 4. Two representations of a human body detected from a video frame are shown here. Key points carry richer information than a bounding box and work better in controlling a robot’s camera orientation and movement.

References

1. Cao, Z., Simon, T., Wei, S.-E., & Sheikh, Y. (2017). Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. IEEE Conference on Computer Vision and Pattern Recognition.

2. Dalal, N., & Triggs, B. (2005). Histograms of Oriented Gradients for Human Detection. IEEE Conference on Computer Vision and Pattern Recognition.

3. Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2016). You Only Look Once: Unified, Real-Time Object Detection. IEEE Conference on Computer Vision and Pattern Recognition.

4. Sigurdsson, G. A., Varol, G., Wang, X., Farhadi, A., Laptev, I., & Gupta, A. (2016). Hollywood in Homes: Crowdsourcing Data Collection for Activity Understanding. European Conference on Computer Vision.

5. Simonyan, K., & Zisserman, A. (2014). Two-Stream Convolutional Networks for Action Recognition in Videos. Conference on Neural Information Processing Systems.

Chih-Yuan Yang

IoX Center